Student research explores AI privacy

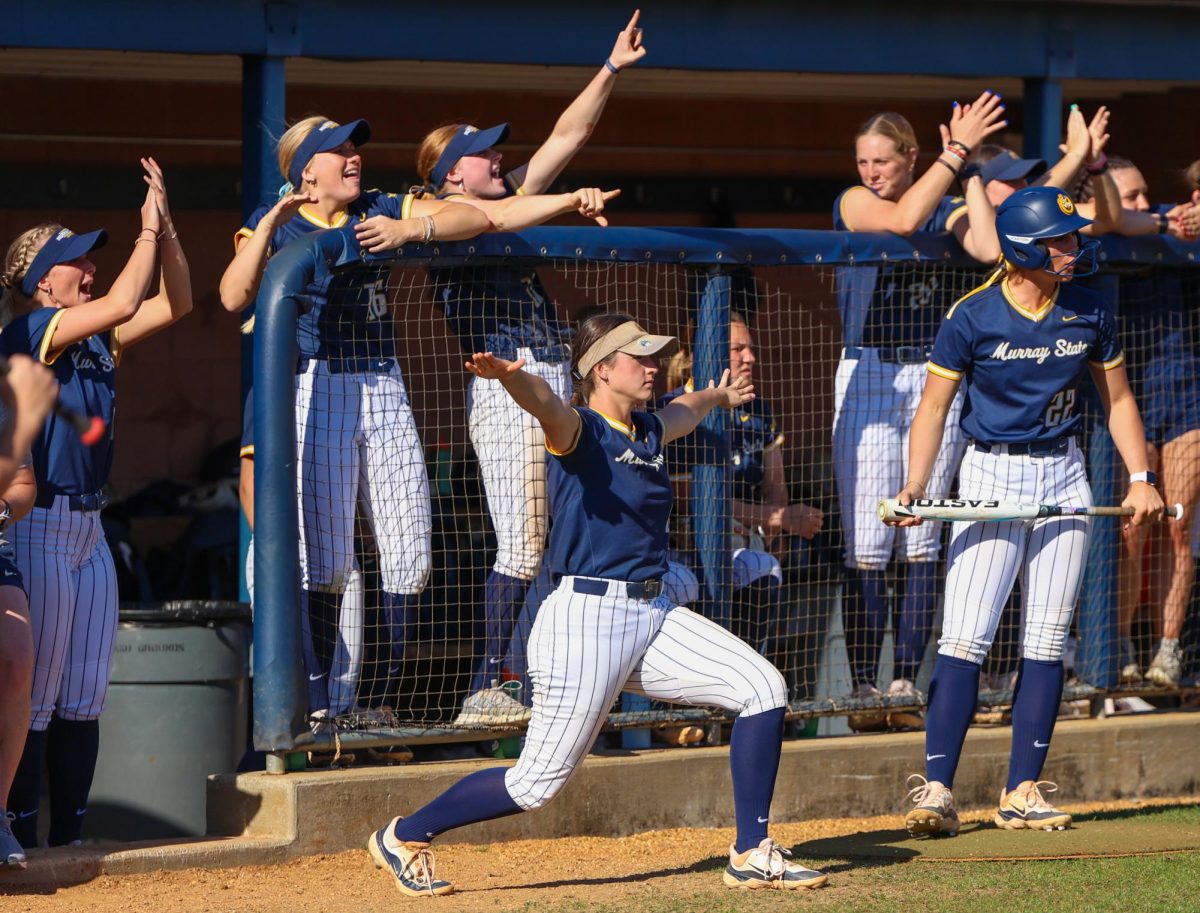

Student researchers focus on smart home device privacy, data security and AI. (Rebeca Mertins Chiodini/The News)

May 4, 2023

Under the supervision of Cybersecurity and Network Management Professors Randall Joyce and Faris Sahawneh, three students are researching smart home devices and their implications for privacy and data security.

Smart home devices such as the Amazon Alexa and Google Home have grown prevalent in U.S. households, offering conveniences like voice-controlled assistants, home automation and music playback. But the ability of these devices to listen, record and store audio data has sparked debates on users’ privacy and the potential for misuse by manufacturers or unauthorized third parties.

The students—senior CNM majors Will Hudson and Zach Coplea and math major Mitch Pierson—have recorded over 140 hours of TV shows using an array of devices.

Sahawneh said the research aims to provide a public service to the campus and wider community by educating them on how to guard privacy while using these devices.

“We are analyzing the data to see what trigger words would turn those speakers on and whether this [artificial intelligence] technology is biased,” Sahawneh said.

Specifically, Coplea said, the team checks for misactivations with the trigger words.

“With some devices, it’s ‘Alexa,’ ‘Echo,’ ‘Computer,’ ‘Ziggy,’ stuff like that,” Coplea said. “If we find a TV show that we play through speakers accidentally triggers one of the devices, we are monitoring the network traffic from those devices to see if we can find that activation.”

The students played TV shows including “SpongeBob SquarePants,” “The Big Bang Theory,” “The X-Files,” “The Golden Girls” and “Frasier.” Researchers included a diverse range of shows from different countries. They showed particular interest in the TV show “Lost,” which incorporates multiple accents and languages.

In the preliminary part of the investigation, the group used Google Translate to say words in different accents.

“You could type a word that’s not the wake word at all, but it sounds like the wake word, and that’ll activate it,” Hudson said. “I think there’s some leeway they give the smart speaker in order to understand the different accents that allows it to be triggered on accident more often.”

Hudson said he is concerned about user privacy issues with smart home devices.

“You may have run into a situation where you’re talking about something on the phone, or you see an advertisement pop up for that,” Hudson said. “That, for me, is definitely a confirmation that they’re listening when they shouldn’t be.”

Coplea said it is obvious the devices are always listening.

“In order for them to know when the trigger word is said, they always have to keep listening for it to happen,” Coplea said. “What happens with that data when it’s not the trigger word? That’s a concern. Where does it get sent? Does it just get deleted upon receiving it?”

Amazon devices allow users to view the audio data sent to and from the company’s servers, which Hudson said reveals a great deal of traffic.

“Not to mention…sometimes, if you asked the smart speaker a question without saying the wake word, it might even tell you, ‘You didn’t say the wake word. Say it again with the wake word.’”

An NBC News story suggested the Amazon Alexa could be key to solving a murder, which Coplea found revealing.

“It could have actually recorded the entire murder that was happening in front of the device,” Coplea said. “The fact that police could think that’s a point of interest in terms of getting information from it, that’s another indicator that maybe the privacy of individuals on these devices aren’t the most well-kept.”

While Coplea acknowledged AI represents a technological revolution, he said it can be scary.

“It seems exponential, the increase in how advanced it’s getting,” Coplea said. “I mean, ChatGPT just came out last December. Microsoft invested…several billions of dollars.”

Coplea said society will have to be responsible with AI as it continues to develop.

“We’re also going to have to be careful with who owns the chatbots,” Coplea said. “Should it be something that private corporations should be in charge of solely, or should it be more open-sourced? …Some people might be concerned with political biases or ideological biases that might be in the code itself.”

The team aims to publish the research later this winter or by spring 2024 in the Institute of Electrical and Electronics Engineers Security & Privacy journal.